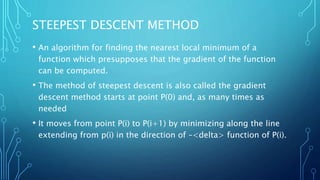

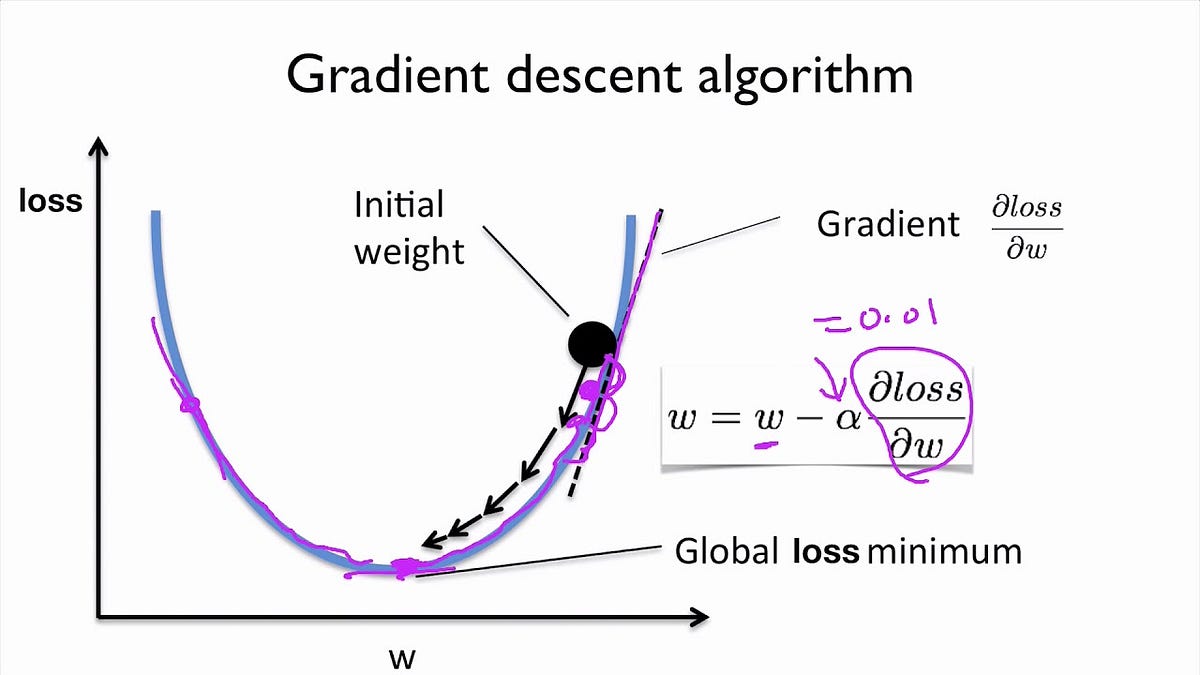

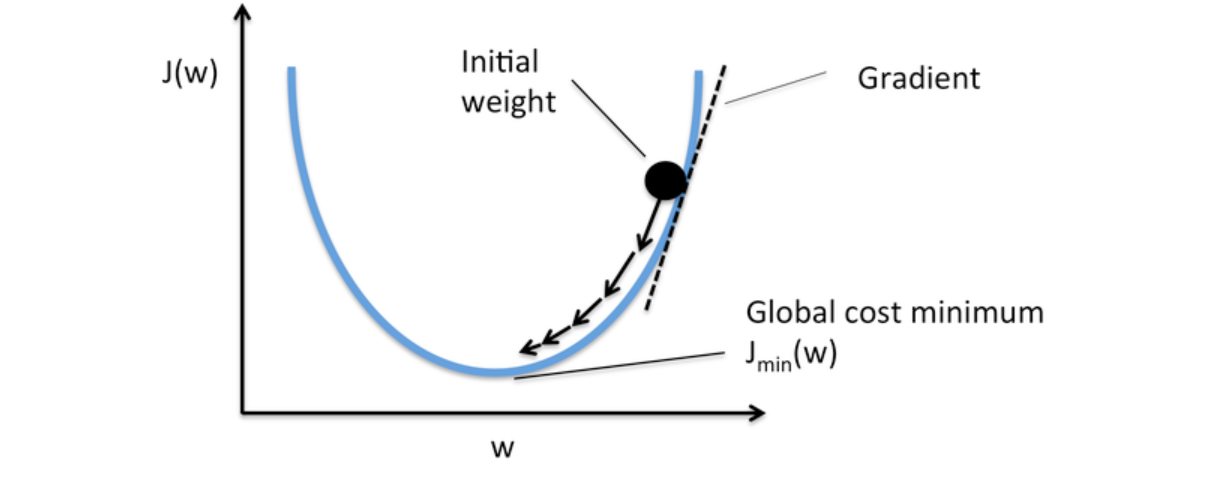

MathType - Gradient descent is an iterative optimization algorithm for finding local minima of multivariate functions. At each step, the algorithm moves in the direction opposite to the gradient, consequently reducing
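The step described above can be written as a single update rule. The symbols here are assumptions introduced for illustration and do not come from the source: $f$ is the function being minimized, $x_k$ is the current point, and $\alpha > 0$ is a step size (often called the learning rate).

$$
x_{k+1} = x_k - \alpha \, \nabla f(x_k)
$$

For a sufficiently small $\alpha$ and a nonzero gradient, this step gives $f(x_{k+1}) < f(x_k)$, which is why moving against the gradient lowers the function value.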

By a mysterious writer

Description

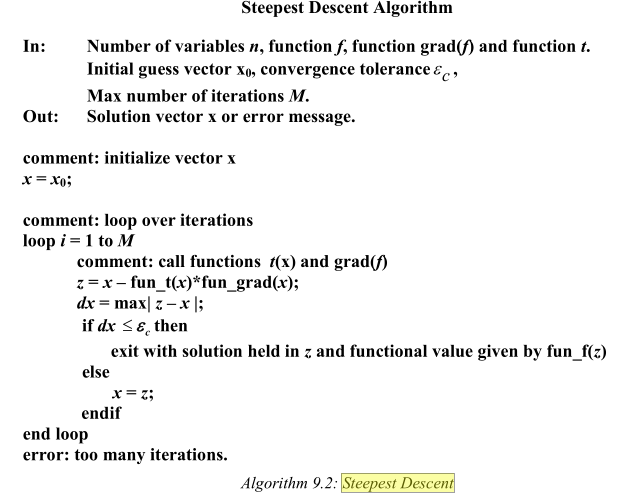

Chapter 4 Line Search Descent Methods Introduction to Mathematical Optimization

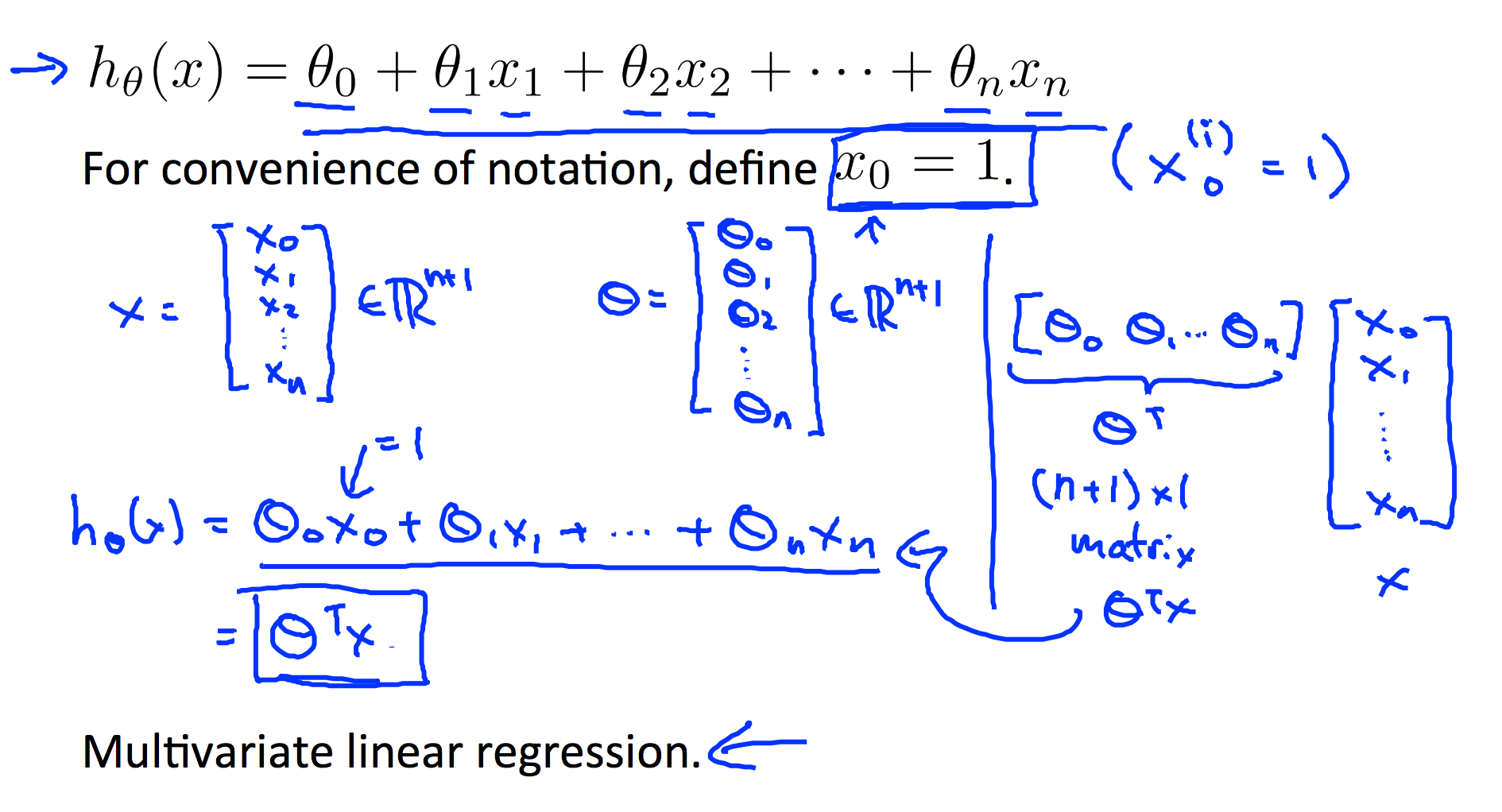

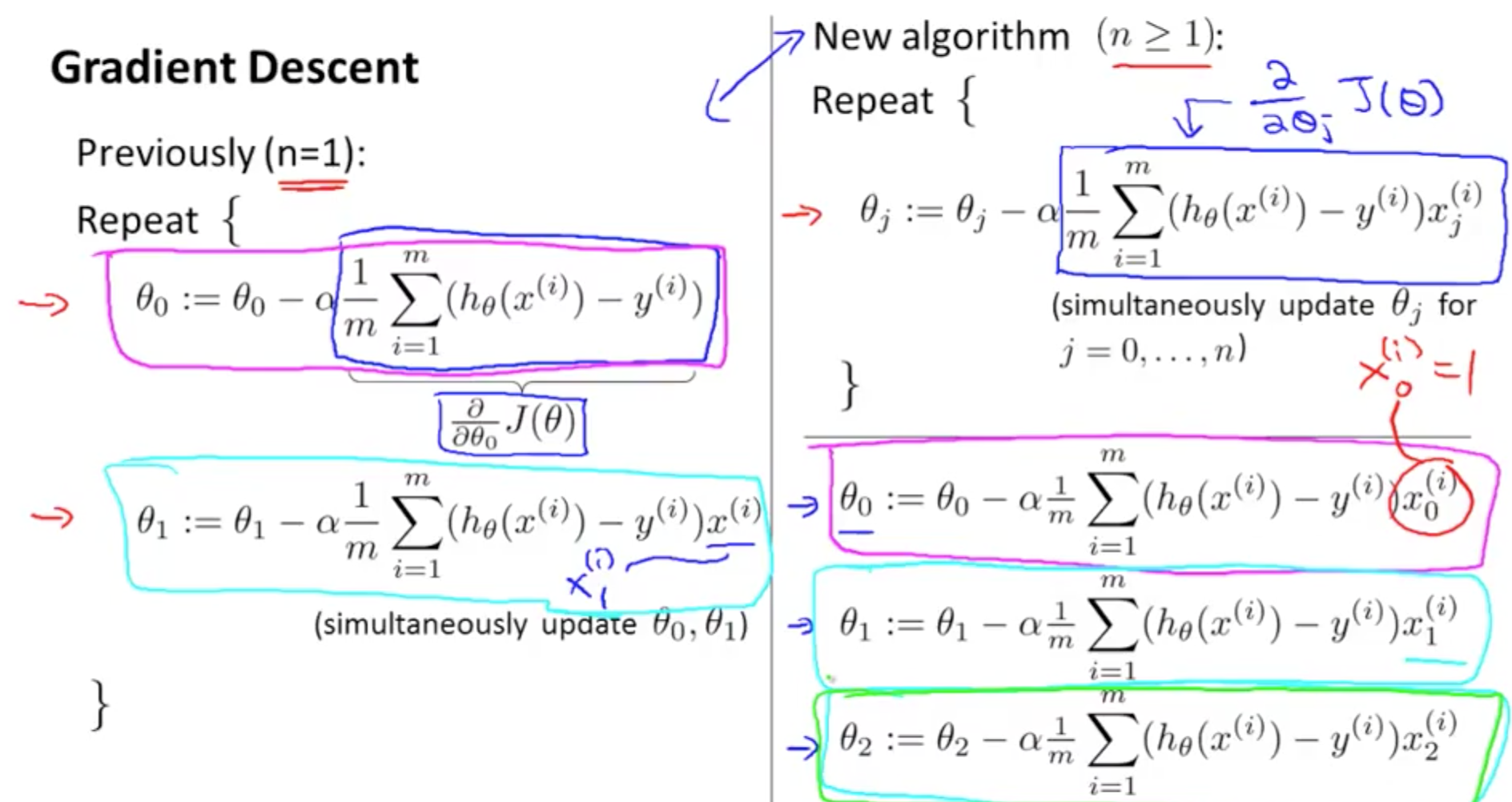

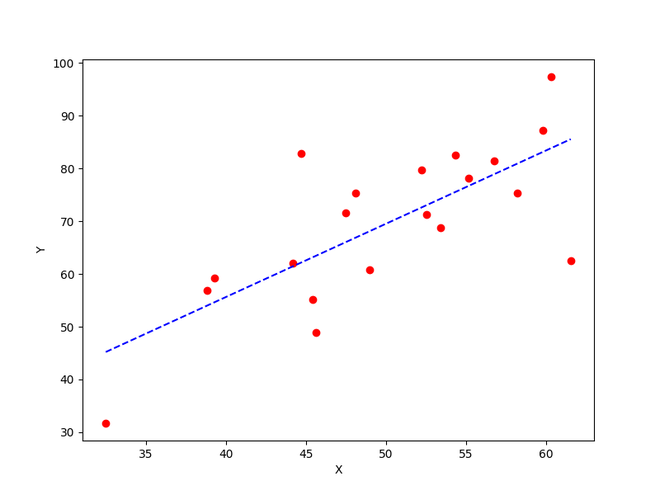

[L2] Linear Regression (Multivariate). Cost Function. Hypothesis. Gradient

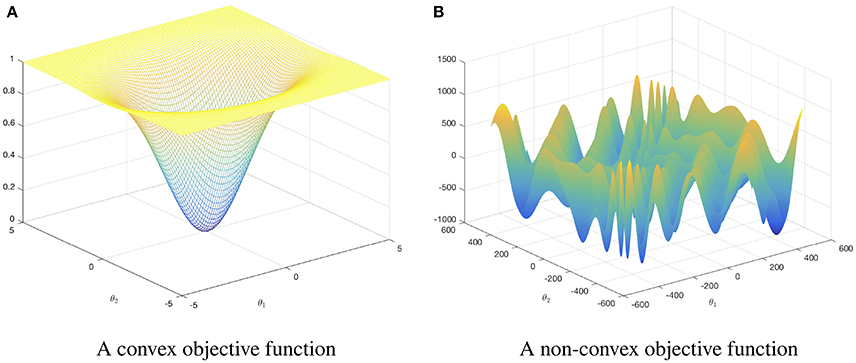

Gradient descent is a first-order iterative optimization algorithm for finding a local minimum of a differentiable function. To find a local minimum of a function using gradient descent, we take steps proportional
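As a rough illustration of that definition, here is a minimal Python sketch. The function name `gradient_descent`, the parameters `learning_rate` and `n_steps`, and the example function `f(x, y) = (x - 1)^2 + (y + 2)^2` are all hypothetical choices made for this example, not taken from any of the articles listed here.

```python
def gradient_descent(grad, x0, learning_rate=0.1, n_steps=100):
    """Repeatedly step against the gradient, starting from x0.

    grad: function returning the gradient (a list) at a point (a list).
    learning_rate, n_steps: illustrative defaults, not tuned values.
    """
    x = list(x0)
    for _ in range(n_steps):
        g = grad(x)
        # Each coordinate moves proportionally to the negative gradient.
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x


def grad_f(p):
    # Gradient of the assumed example f(x, y) = (x - 1)^2 + (y + 2)^2,
    # whose only minimum is at (1, -2).
    x, y = p
    return [2 * (x - 1), 2 * (y + 2)]


minimum = gradient_descent(grad_f, [0.0, 0.0])
print(minimum)
```

On this convex example the iterates approach the point (1, -2). On a non-convex function the same loop would only be expected to reach some local minimum, and a step size that is too large can make it diverge.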

Linear Regression From Scratch PT2: The Gradient Descent Algorithm, by Aminah Mardiyyah Rufai, Nerd For Tech

Optimization Techniques used in Classical Machine Learning ft: Gradient Descent, by Manoj Hegde

Linear Regression with Multiple Variables Machine Learning, Deep Learning, and Computer Vision

Can gradient descent be used to find minima and maxima of functions? If not, then why not? - Quora

GRADIENT DESCENT Gradient descent is an iterative optimization algorithm used to find local minima…, by Kucharlapatiaparna

How to implement a gradient descent in Python to find a local minimum? - GeeksforGeeks

All About Gradient Descent. Gradient descent is an optimization…, by Md Nazrul Islam

Gradient Descent Algorithm

[Solved] 4. Gradient descent is a first-order iterative optimisation

Implementing Gradient Descent for multilinear regression from scratch., by Gunand Mayanglambam, Analytics Vidhya
